Dopamine: Everything you need to know

This is my attempt to give a more or less complete, up-to-the-minute account of what we know about dopamine. It's long, but it's thorough.

It's official: based on Google Trends data, dopamine is the most popular neurochemical of them all. Serotonin was number one until 2021, but since then, dopamine has ruled the roost.

Intuitively, this feels about right: awareness of behavioural addictions and the role dopamine plays in them has risen a lot in recent years. It also just feels like more people than ever before – people otherwise completely innocent of neuroscience – are using the word dopamine colloquially to refer to something like 'a neural correlate of pleasure'.

Despite this rise in popularity – and despite my searching – never have I actually come across any content that gives a comprehensive accounting of what dopamine is or how it works. Given how apparently important a neurochemical dopamine is, this is surely a gap worth plugging.

This post – the fruit of far more time and research than I had originally planned – is my attempt to do exactly that.

Turns out there are a number of widespread misconceptions about exactly what dopamine does and doesn't do. In this post, I’m going to try and dispel all of them while presenting an up-to-the-minute picture of what we do actually know about dopamine.

Here goes.

P.S. For the sake of brevity and readability, I'm going to assume readers have a basic understanding of neuroscience. For anyone who finds themselves struggling with certain parts, here's a fairly succinct neuroscience primer that will hopefully give you the rudiments needed to make sense of everything I say here.

P.P.S. I’ve done so much research – and come across so many interesting findings – that I'm reluctant to leave any of it out. I’ve therefore thrown in an appendix at the bottom of this piece, where I've included all of the cool, slightly peripheral things I've discovered during my travels. I’ll also be doing a follow-up post where I’ll be discussing the plausibility of dopamine fasts. Stay tuned.

Dopamine and the dopaminergic system

In the interest of not ruining the flow of later parts of this post, I'm going to do a little bit of an exposition dump here to give you the basic knowledge you need to understand later sections. Where possible, I'll keep this sketch fairly low-resolution, because I don't want to burden you with details that don't actually enhance your understanding of how this stuff works.

So:

Dopamine is a signalling molecule in the brain known as a neurotransmitter. For a long time, researchers thought that dopamine was just a precursor of noradrenaline (another important neurotransmitter), but it turns out dopamine is directly involved in a range of important phenomena and pathologies, including movement, learning, motivation, habit formation, addiction, Parkinson's disease, Huntington's disease, and more.

There are certain neurons, i.e. nerve cells, in the brain that produce dopamine. When these neurons are activated in specific ways, they release that dopamine into the space outside the cell, i.e. the extracellular space, where it binds with receptors on other neurons and thereby influences their firing patterns.

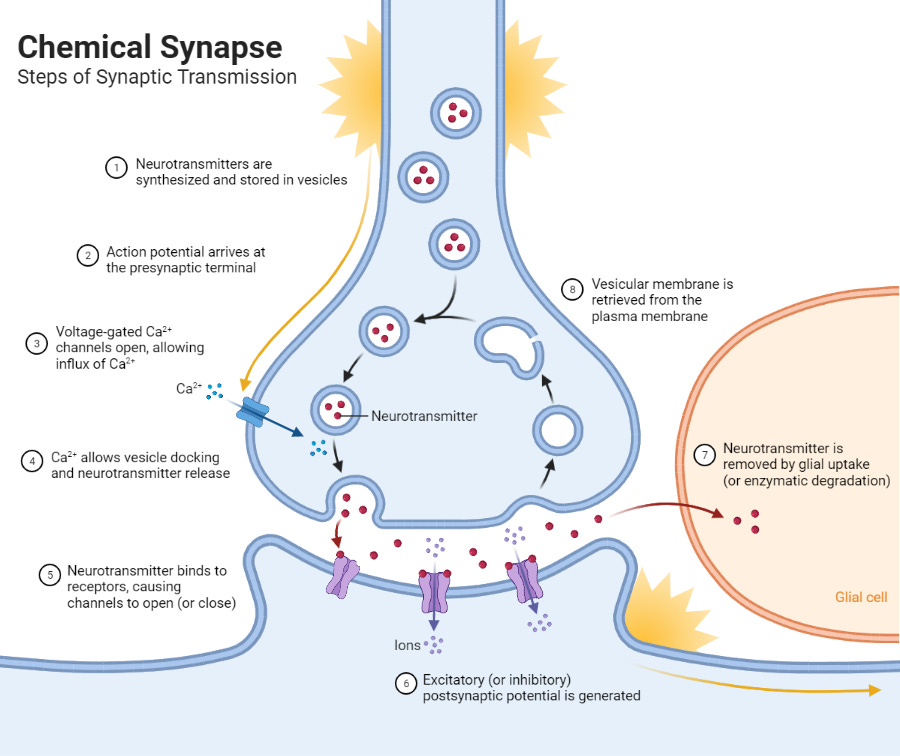

With many other types of neuron, the upstream neuron – otherwise known as the presynaptic neuron – pretty much locks directly onto the downstream neuron – i.e. the postsynaptic neuron. The tiny space between these two cells, into which the neurotransmitter is released, is known as the synapse, and this kind of intercellular communication is called synaptic transmission.

With dopamine neurons, on the other hand, the majority of their signalling seems to be done via volume transmission, which is essentially where they release a ton of dopamine into the space outside the cell, without targeting any specific downstream neurons or receptor sites in particular.

Volume transmission is way less precise than synaptic transmission, but it comes with a number of advantages that allow dopamine to do its job. One quick example: there’s evidence that dopamine is involved in learning (to be discussed soon) and that it plays a role in promoting plasticity (2). By being sprayed far and wide, dopamine may be able to identify and strengthen certain recently active neural pathways associated with behaviours that preceded some kind of a reward.

To make things slightly more complicated, there are five types of receptor that dopamine can bind to, named D1 through D5. D1 and D5 are classed in the D1-like family; D2, D3 and D4 are classed in the D2-like family.

Dopamine's effects on downstream neurons depend on the specific receptors that those neurons have built into their cell membranes. As I understand things, each downstream neuron (at least in the striatum) predominantly expresses one class of dopamine receptor, i.e. either D1-like or D2-like receptors, which makes things a bit easier for us to grasp.

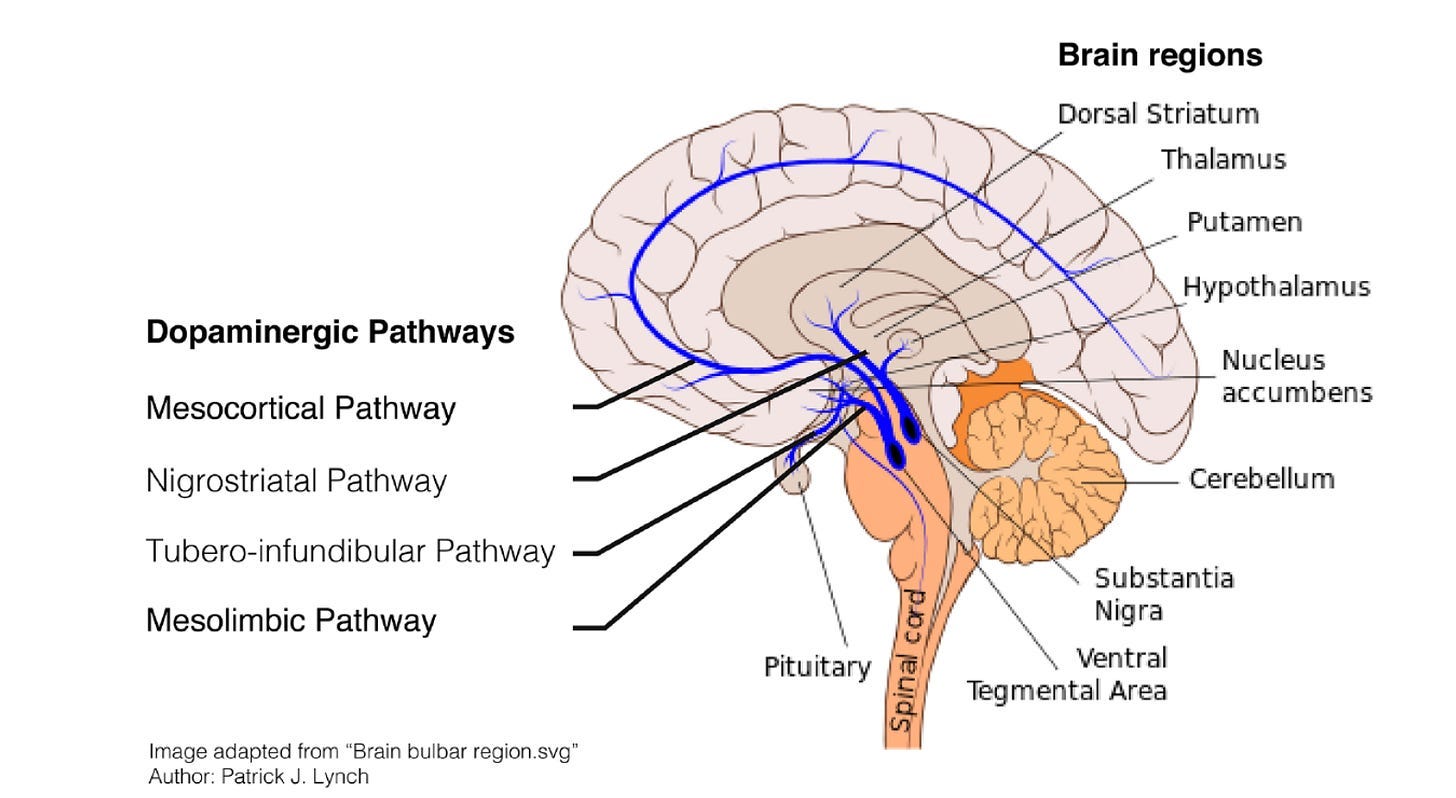

Although there are dopamine-containing neurons distributed all across the central nervous system – including the retina, the olfactory bulb, and the periventricular hypothalamus – the two most important populations, or nuclei, are located next to each other in the midbrain:

1. The substantia nigra (SN)

2. The ventral tegmental area (VTA)

The first population in the SN connects mostly to the dorsal (i.e. top part of the) striatum. Known as the nigrostriatal pathway, this pathway is mostly involved in action-selection, habits, and goal-directed behaviour.

Degeneration of the dopamine-containing cells in the substantia nigra is all that's needed to cause the motor deficits associated with Parkinson's.

The second population in the VTA connects mostly to the ventral (i.e. bottom part of the) striatum, as well as various parts of the prefrontal cortex (PFC). Known as the mesocorticolimbic pathway, this pathway is mostly involved in movement, motivation, and reinforcement learning.

One thing worth knowing is that there’s an important population of neurons in the ventral striatum called the nucleus accumbens (NA). Throughout this article, you can pretty much assume that the terms ventral striatum and NA are being used interchangeably, since the NA seems to be (by far) the most important part of the ventral striatum insofar as dopamine is concerned.

Here's a diagram to clear some of this up:

(Note: this diagram treats the mesocortical and the mesolimbic pathways as two separate things, but in this post (and in much of the academic literature, too) we’re treating them as a single pathway – hence ‘mesocorticolimbic’. Also, ignore the tubero-infundibular pathway – it isn’t important for what we’re talking about here.)

There is evidence that the mesocorticolimbic and nigrostriatal pathways overlap more than initially believed (3), but for our purposes here, let's leave it there for now.

There are one or two additional features of the dopamine system's neuroanatomy that it will be worth your knowing, but in the interest of not getting too bogged down in detail at the start, this gives us enough to get going.

Quick summary:

Dopamine is a signalling molecule in the brain

Unlike certain other neurotransmitters, e.g. glutamate, which are distributed via precise synaptic transmission, dopamine is mostly distributed via volume transmission

It is primarily produced by two populations of neurons found in the SN and the VTA

Dopamine neurons in the SN connect mostly to the dorsal striatum, giving rise to the nigrostriatal pathway; those in the VTA connect mostly to the ventral striatum and PFC, giving rise to the mesocorticolimbic pathway

There are two main classes of dopamine receptors: D1-like receptors and D2-like receptors

Dopamine and pleasure

As mentioned in the intro, when most people talk about dopamine, they seem to be talking about something like 'a neural correlate of pleasure'. In other words: when I eat chocolate, my brain releases dopamine, and it is this dopamine release that causes, or is correlated with, my sensation of pleasure.

While this was once – briefly – the accepted scientific view, it isn't any more. Here's the full story:

In 1954, it was discovered that rats will voluntarily administer brief bursts of weak electrical stimulation to certain sites in their brains, and humans were later found to do the same. This phenomenon is known as intracranial self-stimulation, and it was originally theorised that the parts of the brain that people and rats choose to self-stimulate may be involved in the generation of pleasure – hence why we choose to stimulate them (1).

From there, the race was on to map these sites and establish the 'pleasure' circuitry of the brain (1).

After not too long, it became apparent that the mesocorticolimbic pathway – as discussed above – and dopamine were deeply implicated in intracranial self-stimulation. Four pieces of strong evidence for this:

Many of the brain sites that support intracranial self-stimulation are part of the mesocorticolimbic pathway (1).

Intracranial self-stimulation often causes an increase in dopamine release in the mesocorticolimbic pathway (1).

Dopamine agonists, i.e. drugs that enhance the effects of dopamine, tend to increase intracranial self-stimulation; and dopamine antagonists, i.e. drugs that inhibit the effects of dopamine, tend to decrease it (1).

Lesions of the mesocorticolimbic pathway tend to disrupt intracranial self-stimulation (1).

As a result of all this data, it was suggested that dopamine is a key player in the generation of pleasure in the brain – a hypothesis known as the 'dopamine pleasure' or 'dopamine hedonia' hypothesis.

We now have some pretty good evidence that this is almost certainly wrong.

In fact, even Roy Wise, the guy who originally proposed the hypothesis (and who was also involved in a lot of the intracranial self-stimulation research), has come out and said “I no longer believe that the amount of pleasure felt is proportional to the amount of dopamine floating around in the brain” (6).

Here's why he (and pretty much everyone else) thinks this:

It turns out that what we typically think of as pleasure can be broken down into three constituent neurobiological processes (5):

Liking – the actual pleasure or hedonic component of a reward. This is what we normally think of when we use the word pleasure.

Wanting – motivation for a reward. It's possible, as we will soon see, to want something that is not pleasurable or, likewise, to not want something that is.

Learning – associations and representations about future rewards based on past rewards, e.g. I eat a chocolate bar, I learn that chocolate is enjoyable, ergo next time I encounter chocolate, I want it.

In essence, wanting happens when you start to desire and pursue a specific thing; liking happens while you are consuming that thing; and learning happens throughout, because you're learning about both the cues and behaviours that preceded the pleasurable thing, as well as the intensity of the pleasure that thing produces.

It can be quite tricky to tease these components apart because rewarding stimuli, e.g. a chocolate bar, tend to activate all three components more or less simultaneously. That said, neuroscientists have developed some quite smart ways of doing this, which to my mind aren't perfect but seem to (mostly) get the job done. Here's how:

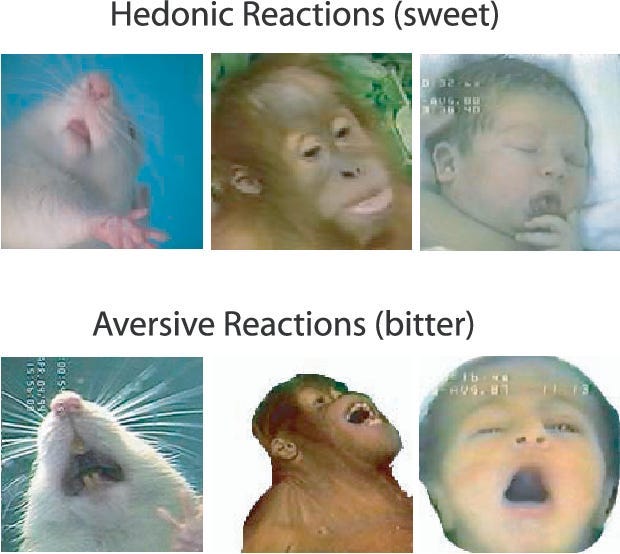

Almost all species of animal, including humans, show objective signs of liking something. For example, if you give a baby a sweet taste, they’ll consistently relax their facial muscles; if you give them a bitter taste, they'll produce a scrunched up, disgusted reaction. The same is true of a whole host of animals, including apes, monkeys, and mice (6).

By measuring the intensity of their objective liking reactions, we can essentially get a read on how much an animal actually likes, i.e. enjoys, something.

Anyway, back to dopamine and why we're now convinced that it’s not the cause of pleasure:

Rats with almost complete destruction of their mesocorticolimbic and nigrostriatal neurons via lesions show no difference whatsoever in orofacial liking reactions to sweet tastes. In other words, you remove almost all the neurons in their brains that produce dopamine and they still show normal liking reactions (6).

In humans, Parkinson's sufferers whose dopamine neurons have been more or less completely destroyed give normal hedonic ratings to the sensory pleasure of sweet tastes (6).

In humans, subjective pleasure ratings of cocaine aren't reduced by pharmacological disruption of the dopamine system – but, interestingly, 'wanting' ratings are (6).

Dopamine elevations in the NA (recall, a part of the ventral striatum) don’t increase liking for a sweet taste despite increasing motivational wanting to obtain the reward (6).

Finally, here's a particularly interesting study that sort of summarises why we believe dopamine plays no role in liking – and that also hints at what it does actually seem to do (10):

Berridge and his team used mice with a dopamine transporter knockdown mutation to investigate the role of dopamine in liking and wanting. This mutation means that the mutant mice have 70% higher levels of extracellular dopamine in the striatum, so by comparing their reactions and performance in specific tasks with those of wild-type mice with normal dopamine levels, the researchers were able to get a read on what dopamine is actually up to.

In the first part of the experiment, they simply gave these mice pretty much unlimited access to food and water over a four-week period and monitored their consumption. The mutant mice with elevated dopamine ate and drank slightly more than their wild-type counterparts. An interesting result, but nothing shocking.

The next part of the experiment involved a runway task, in which you monitor how an animal traverses a runway to reach some kind of rewarding stimulus: its speed, its trajectory, and other, more qualitative aspects of its behaviour. In this experiment, the rewarding stimulus was a sweet breakfast cereal that the mice had already been habituated to.

As shown in the graphs above, after the initial goal pre-exposure phase (in which the mice were simply placed in the ‘goal box’ at the end of the runway and given access to the cereal), the mutant mice traversed the runway much more quickly than the wild-type mice, and they reached peak performance much more quickly too.

Digging deeper, the researchers found that the mutant mice left the start box more quickly, required fewer trials to learn, paused less often in the runway, were more resistant to distractions, and proceeded more directly to the goal.

These results might lead you to believe that the mutant mice had an increased capacity for pleasure compared with the wild-type mice, hence why they were apparently more eager to obtain the reward.

However, in the final part of this experiment, the researchers used taste reactivity tests to measure the objective liking reactions of these mice in response to a sweet sucrose taste. It turns out that the mutant mice did not show enhanced liking reactions at all. In fact, at higher concentrations of sucrose, it appeared that the mutant mice liked the sweet taste less than the wild-type mice.

So, in short: higher extracellular dopamine levels as seen in the mutant mice seem to be linked to increased wanting – i.e. motivation – but not increased liking – i.e. pleasure.

TL;DR: the dopamine pleasure hypothesis is almost certainly wrong.

Dopamine and learning

In the 1970s, when it became possible to completely lesion dopamine pathways in the brain, many experts believed that dopamine's main role was tied to movement and behavioural activation, mainly because lesions of dopamine pathways resulted in significant reductions in movement. This hypothesis fit nicely with the observation that conditions associated with damage to dopamine neurons, such as Parkinson's disease and encephalitis, often result in a reduction or even complete loss of certain motor functions (2).

Unfortunately, there were a few refractory pieces of evidence that refused to fit with this theory. For one, animals with complete dopamine pathway lesions could still swim if you put them in water. Also, akinetic patients with severe damage to their dopamine neurons could still respond if there was a fire alarm (2).

This tension was eventually resolved thanks to a series of pioneering studies that involved recording dopamine neurons in the VTA.

In one study, Wolfram Schultz and colleagues trained monkeys to expect juice at a fixed interval after a visual or auditory cue was presented. For example, every time a specific light flashed, juice would be delivered a second or two later. While the monkeys did this, the researchers recorded the firing rates of dopamine neurons in the VTA (8).

They found that before the monkeys had learned the cues (and the juice was therefore unexpected), dopamine neurons fired above baseline levels when the juice was delivered. However, once the monkeys learned that certain cues predicted the juice, the timing of the firing changed: neurons no longer fired in response to the presentation of the juice – the reward. Instead, they responded earlier, when the predictive visual or auditory cue was presented, e.g. the flashing light (8).

As I understand things, these findings were initially interpreted as confirming the then-in-vogue movement theory of dopamine, i.e. that the rewarding stimuli triggered immediate movements that showed up as spikes in dopamine firing (2).

However, a number of additional findings from the study pointed to an alternative interpretation:

If the cue was presented but the reward was withheld, firing paused at the time the reward would have been presented, sending firing rates below baseline.

If a reward exceeded expectation or was unexpected (because it appeared without a prior cue), firing rates were enhanced.

If the reward was exactly as expected, firing patterns upon receipt of the reward would stay more or less steady – neither a spike nor a dip.

A decade after the paper's original publication, its findings were reinterpreted and the Reward Prediction Error (RPE) theory of dopamine was born. Under the RPE view, the absolute size of a reward does not dictate the firing rates of dopamine neurons; instead, it is the difference between the size of the actual reward and the expected reward that counts.
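Written out, the RPE is just a subtraction, and its sign lines up with the three firing patterns listed above. Writing δ for the prediction error:

```latex
\delta = R_{\text{actual}} - R_{\text{expected}},
\qquad
\begin{cases}
\delta > 0 & \text{reward larger than expected, or unexpected (firing above baseline)}\\
\delta = 0 & \text{reward exactly as expected (firing steady)}\\
\delta < 0 & \text{expected reward withheld (firing pauses below baseline)}
\end{cases}
```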

The RPE idea originated in the computer science field of reinforcement learning, where RPEs were used as a learning signal to update the estimated values of future rewards – estimates that could be used to guide future behaviour. Since dopamine cell firing resembles RPEs, and since RPEs – in the context of reinforcement learning, at least – are used for learning, it became common to think of dopamine as a learning signal.
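To make the 'learning signal' idea concrete, here is a minimal temporal-difference (TD) learning sketch. It is a toy under stated assumptions, not a model taken from the studies cited here: time after the cue is represented as a chain of five hypothetical states, the pre-cue period has a fixed value of zero (because the cue arrives unpredictably), there is no discounting (gamma = 1), and the learning rate is 0.1. The function name `run_trial` is made up for illustration.

```python
def run_trial(values, reward=1.0, alpha=0.1, gamma=1.0):
    """Run one cue -> delay -> reward trial with TD(0) learning.

    values[t] is the learned value of the t-th time step after cue onset
    (a hypothetical 'tapped delay line' representation of elapsed time).
    Returns the TD errors: errors[0] is the error at cue onset, and
    errors[t + 1] is the error at step t (the last one coincides with reward).
    """
    n = len(values)
    # Entering the cue state from a pre-cue state whose value is fixed at 0,
    # because the cue itself arrives unpredictably.
    errors = [gamma * values[0] - 0.0]
    for t in range(n):
        r = reward if t == n - 1 else 0.0                  # reward only at the final step
        next_value = values[t + 1] if t + 1 < n else 0.0   # the trial ends after reward
        delta = r + gamma * next_value - values[t]         # the TD error, i.e. the RPE
        values[t] += alpha * delta                         # update the value estimate
        errors.append(delta)
    return errors


values = [0.0] * 5                       # nothing has been learned yet
first = run_trial(values)                # naive animal: unexpected reward
for _ in range(500):                     # extended training
    last = run_trial(values)
omitted = run_trial(values, reward=0.0)  # cue presented, reward withheld

print([round(e, 2) for e in first])  # [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]
print([round(e, 2) for e in last])   # [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(round(omitted[-1], 2))         # -1.0
```

On the first trial the error appears at reward delivery; after training it has moved to cue onset and vanished at the (now fully predicted) reward; withholding the reward then produces a negative error. That is the same pattern as the Schultz recordings.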

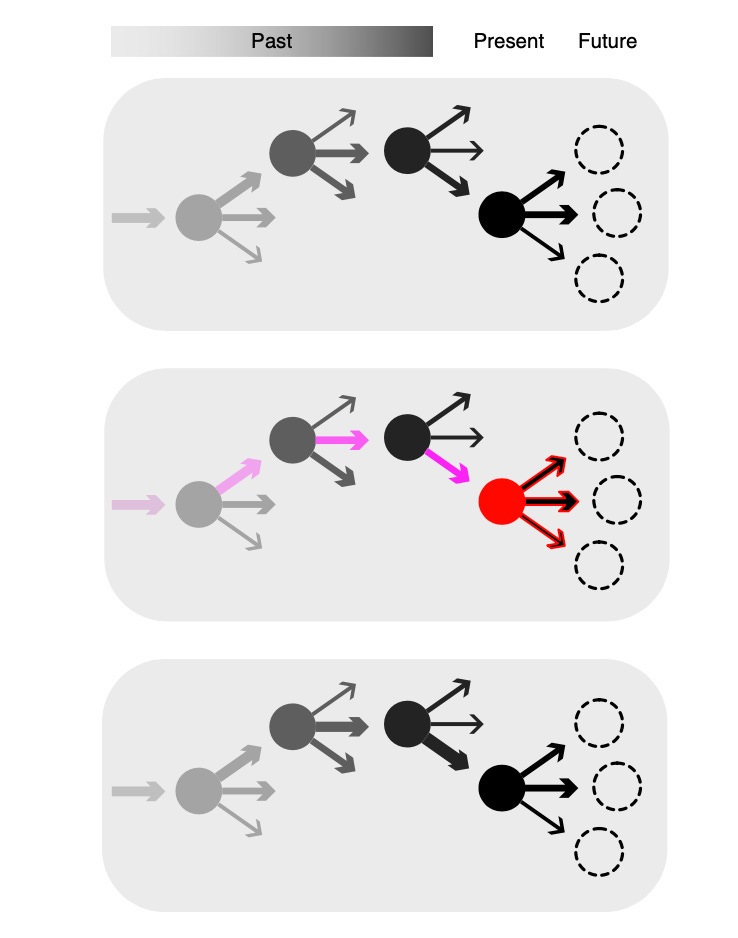

Here’s a nice diagram to explain how this kind of learning seems to work:

Circles represent specific states, and the arrows represent potential actions from those states. Arrow widths represent the learned values of each action. In the second panel, the red circle represents reward and dopamine release, which then retroactively updates the values of the actions that preceded the reward (shown in pink). The bottom panel represents the updated values (changes in thickness) that result from the learning that occurred in the second panel.

So, to give an example: let’s say that I’m in my bedroom lying on my bed. This situation represents a ‘state’. I could do a number of things, but my past learning suggests that playing Xbox is likely to be the most rewarding thing I can do in this state. I therefore choose to play Xbox, and this activity is indeed rewarding – more so than expected – which results in a dopamine spike. As a result of this dopamine spike, the value associated with playing Xbox increases, which means that the next time I encounter this state, I am even more likely to choose to play Xbox.
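The bedroom example can be sketched as a single update of action values. This is a simplified, hypothetical illustration: the three actions, their starting values, the reward of 1.0 and the learning rate of 0.5 are all made up, and only the action just taken is updated, skipping the multi-step credit assignment shown in the diagram.

```python
# Hypothetical values for actions available in the "lying on my bed" state.
q_values = {"play Xbox": 0.6, "read": 0.4, "tidy room": 0.1}


def choose(q):
    """Greedy choice: pick the action with the highest learned value."""
    return max(q, key=q.get)


def update(q, action, reward, alpha=0.5):
    """Move the chosen action's value towards the reward it actually produced."""
    rpe = reward - q[action]  # positive when the outcome beats the expectation
    q[action] += alpha * rpe
    return rpe


action = choose(q_values)
rpe = update(q_values, action, reward=1.0)  # more rewarding than expected
print(action, round(rpe, 2), round(q_values[action], 2))  # play Xbox 0.4 0.8
```

Because the RPE was positive, the value of playing Xbox rises from 0.6 to 0.8, so the greedy choice in this state becomes even more entrenched.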

In addition to nicely explaining the results of the Schultz experiment, the RPE theory of dopamine has a number of other things to recommend it:

The RPE hypothesis fits nicely with the finding that dopamine modulates plasticity, i.e. cell change, in the striatum. For example, we now know that dopamine release in the striatum (along with a few other coincident factors) causes dendritic spines to grow (2).

Addictive drugs also boost striatal dopamine release, so the RPE theory fits in with this too: the drug consistently creates a positive RPE, forcing the brain to continually update its value estimate associated with the drug (2).

Even serious akinesia (inability to voluntarily move one's muscles and limbs) can be explained by the RPE theory: lack of dopamine may be treated as a constantly negative RPE that progressively updates all values to zero (2).

Also, since these initial experiments, optogenetic studies have confirmed the dopaminergic identity of RPE-coding cells and have shown that they do in fact modulate learning (2).
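The drug and akinesia points can be caricatured with a simple delta-rule update, in which a value estimate moves a fraction of the way towards each new reward. This is a hypothetical toy, assuming a learning rate of 0.1 and two made-up switches that are not taken from the studies cited here: `drug_boost`, which puts a floor under the RPE so that it stays positive however well the reward is predicted, and `dopamine_intact`, which, when turned off, stops rewards registering at all.

```python
def learn(value, reward, trials, alpha=0.1, drug_boost=0.0, dopamine_intact=True):
    """Apply repeated delta-rule updates to a single value estimate.

    drug_boost (hypothetical): a dopamine surge that the RPE can never fall
        below, so it can never be fully predicted away.
    dopamine_intact (hypothetical): if False, rewards no longer register,
        so every RPE is negative while the value is above zero.
    """
    for _ in range(trials):
        perceived = reward if dopamine_intact else 0.0
        rpe = perceived - value
        if drug_boost > 0:
            rpe = max(rpe + drug_boost, drug_boost)
        value += alpha * rpe
    return value


normal = learn(0.0, 1.0, trials=200)                           # settles at the true reward
drugged = learn(0.0, 1.0, trials=200, drug_boost=0.5)          # keeps climbing past it
akinetic = learn(1.0, 1.0, trials=200, dopamine_intact=False)  # decays towards zero

print(round(normal, 3))    # 1.0
print(drugged > normal)    # True
print(round(akinetic, 3))  # 0.0
```

In the drug case the value never settles (more trials push it higher still), while in the akinesia case a persistently negative RPE drags the value down to zero.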

Reconciling learning and motivational theories of dopamine

As far as I can tell, the RPE theory of dopamine has dominated the field for the last twenty to thirty years, but as we saw earlier, there’s also good evidence that dopamine is closely tied up with motivation – i.e. wanting – as well.

Here's some more evidence in support of that claim:

Recent genetic studies have shown that dopamine-deficient mice display a complete lack of motivation to engage in goal-directed behaviours, including feeding (3).

Likewise, in dopamine-deficient mice, restoring dopamine signalling in the dorsal striatum restores motivation and feeding behaviour (3).

Microdialysis (used to measure dopamine concentrations) shows a positive correlation between dopamine in the NA core and locomotor activity (as well as other indices of motivation) (2).

Optogenetic stimulation of VTA dopamine cells makes rats more likely to begin work in a probabilistic reward task, as if they had greater motivation (2).

Optogenetic stimulation of SN dopamine neurons (specifically those in the substantia nigra pars compacta, or SNc), or of their axons in the dorsal striatum, increases the probability of movement. These behavioural effects are observable within a couple of hundred milliseconds of stimulation (2).

One reason why it’s been quite tricky to interpret the literature on dopamine is that one large part of it seems to talk only about the RPE theory, without any mention of the motivation side of things, and the other, albeit smaller, part of the literature does the opposite.

There are, however, one or two papers that bridge this gap and that point to the possibility – as well as the difficulty – of reconciling these two views (2).

One of the traditional ways that neuroscientists have tried to do this is by arguing that the learning and motivation roles of dopamine happen on different timescales. At baseline, dopamine neurons tend to fire at a few spikes per second – this is known as tonic firing. When they’re triggered, on the other hand, e.g. by a rewarding stimulus resulting in an RPE, their firing rate increases rapidly in brief bursts – this is known as phasic firing.

The traditional theory has been that slow changes in tonic firing are responsible for general motivation and that fast phasic firing is responsible for learning.

But here’s the issue with this ‘tonic firing = motivation, phasic firing = learning’ theory:

Many of the methods that have been used to establish a relationship between slow-changing, tonic dopamine levels and motivation have suffered from extremely low temporal resolution. So, for example, with microdialysis, the length of the sampling intervals is usually a couple of minutes, meaning, as I understand things, that we don’t get fine-grained information about how dopamine levels are fluctuating on a second-by-second or even subsecond-by-subsecond basis. In other words, just because microdialysis measures dopamine levels slowly does not mean that these levels are actually changing slowly.
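The sampling point can be illustrated with a made-up signal. The sketch below assumes a hypothetical dopamine trace (arbitrary units, not real data) that oscillates with a 4-second period around a constant mean, sampled every 0.1 s for five minutes. Averaging it into 1-minute bins, roughly what a single microdialysis sample does, makes it look flat, while 0.1-second bins reveal the full swing.

```python
import math


def dopamine_trace(duration_s=300.0, dt=0.1, period_s=4.0):
    """Hypothetical dopamine signal (arbitrary units) that swings every few seconds."""
    n = round(duration_s / dt)
    return [1.0 + 0.5 * math.sin(2 * math.pi * i * dt / period_s) for i in range(n)]


def bin_means(signal, samples_per_bin):
    """Average consecutive, non-overlapping chunks of the signal."""
    return [
        sum(signal[i:i + samples_per_bin]) / samples_per_bin
        for i in range(0, len(signal) - samples_per_bin + 1, samples_per_bin)
    ]


trace = dopamine_trace()
slow = bin_means(trace, 600)  # 60 s per sample, like 1-minute microdialysis
fast = bin_means(trace, 1)    # 0.1 s per sample, closer to voltammetry

print(round(max(slow) - min(slow), 3))  # 0.0: the slow samples look flat
print(round(max(fast) - min(fast), 3))  # 1.0: the signal actually swings widely
```

The underlying signal is identical in both cases; only the averaging window differs.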

In a bid to fill this gap in knowledge, Hamid et al. examined dopamine levels in the rat NA during a probabilistic reward task, using both microdialysis and fast-scan cyclic voltammetry (a technique with much finer temporal resolution) (2). Here’s what they found:

Dopamine in the NA, as measured by microdialysis, correlated with reward rate (rewards per minute). But even with improved microdialysis, using 1-minute sampling intervals, dopamine still fluctuated as fast as they could sample it. When they used voltammetry, with its much finer temporal resolution, they observed a tight relationship between subsecond dopamine fluctuations and motivation.

So, in essence, dopamine levels, which correlated closely with reward rate/motivation, were fluctuating extremely quickly, suggesting that the whole ‘phasic firing = learning, tonic firing = motivation’ theory is probably wrong.

But here’s another cool thing the study showed:

As rats performed a sequence of actions in order to obtain a reward, dopamine levels rose progressively higher and higher as they moved towards the reward, reaching a peak just as they obtained the reward.

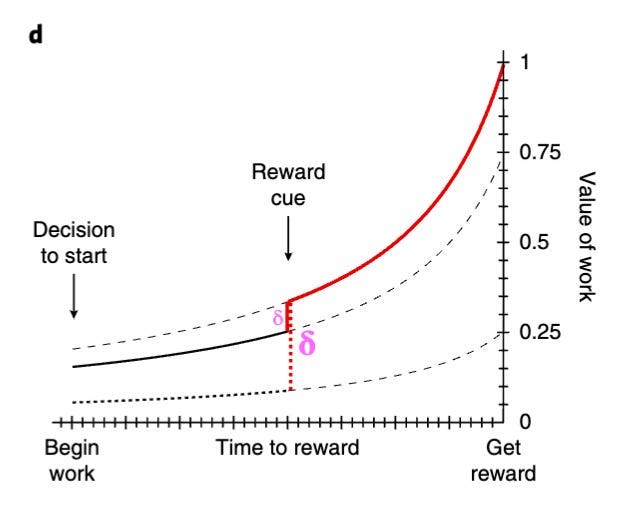

These researchers showed that dopamine levels correlated strongly with instantaneous reward value (or ‘value’, as they call it), which is defined as the expected reward discounted by the expected time needed to receive it. It has therefore been suggested that motivation levels are essentially equal to ‘value’.
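As a rough illustration of how such a ‘value’ signal ramps, here is a sketch that assumes exponential discounting, where each remaining step of delay multiplies the reward by a hypothetical factor of 0.8. The discounting form and the numbers are assumptions for illustration, not values from the study.

```python
def discounted_value(reward, steps_to_reward, gamma=0.8):
    """Expected reward, discounted exponentially by the time left until it arrives."""
    return reward * gamma ** steps_to_reward


# Value at each step as the animal approaches a reward 5 steps away.
ramp = [round(discounted_value(1.0, steps), 3) for steps in range(5, -1, -1)]
print(ramp)  # [0.328, 0.41, 0.512, 0.64, 0.8, 1.0]
```

Value climbs as the reward gets closer, peaking when it is obtained; a larger `reward`, or fewer remaining steps, raises every point on the curve.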

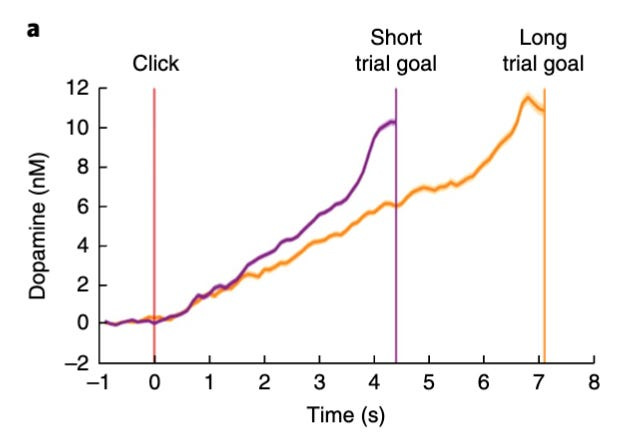

To see what all of this looks like in practice, take a look at the chart below:

These findings are consistent with voltammetry results from other studies, which also showed that dopamine levels climb with increasing proximity to reward – a phenomenon known as ramping.

One other interesting finding was that as the subjects received cues suggesting that rewards were either larger, closer, or more certain than previously expected, the dopamine ramp shifted upwards – representing an increase in motivation (see graph below). It has been suggested that these upward shifts in the dopamine curves represent what essentially amount to RPEs.
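The link between an upward shift and an RPE can be made explicit with a temporal-difference error, a standard reinforcement-learning quantity. The sketch below assumes the same kind of hypothetical exponential discount (0.8 per step); the scenario and numbers are invented for illustration. If the value one step later is exactly what was predicted, the error is zero; if a cue reveals a bigger (or closer) reward, the value jumps and the error is positive.

```python
GAMMA = 0.8  # assumed per-step discount factor


def value(reward, steps_to_reward, gamma=GAMMA):
    """Expected reward discounted by the number of steps until it arrives."""
    return reward * gamma ** steps_to_reward


def td_error(v_current, v_next, reward_now=0.0, gamma=GAMMA):
    """One-step temporal-difference error: r + gamma * V(next) - V(current)."""
    return reward_now + gamma * v_next - v_current


# Four steps from an expected reward of 1.0 ...
v_now = value(1.0, 4)
# ... one step later, either nothing new happens or a cue reveals the reward is 2.0.
as_expected = td_error(v_now, value(1.0, 3))
bigger_reward = td_error(v_now, value(2.0, 3))

print(abs(round(as_expected, 3)))  # 0.0: the ramp continues exactly as predicted
print(round(bigger_reward, 3))     # 0.41: the upward jump shows up as a positive RPE
```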

As such, it seems that dopamine might signal the value of work. Consistent with this, dopamine increases with signals instructing movement but not with signals instructing stillness, even when such signals indicate similar future rewards (2).

Interestingly, this finding actually fits with many earlier experiments that used classical conditioning tasks (like the Schultz one discussed above). After all, if the task simply involves the animal remaining still while cues and rewards are presented to it, then there is no benefit to active work. Since dopamine is posited to signal the value of work, the dopamine ramps observed by Hamid and others would not be expected in these classical conditioning studies – and, of course, they aren't seen!

One question you might still have is ‘how are both of these signals, i.e. learning and motivation, encoded by the same circuitry?’ I don’t want to get bogged down in the minutiae here, so I've added a section in the appendix where I quickly run through an interesting – and, by the looks of things, plausible – hypothesis as to how this might work. For those interested, see the ‘Switching between learning and motivation’ section.

Bringing this all together: What does dopamine mean?

So, we now know that dopamine appears to encode two things: first, the expected value of a reward, discounted by the expected time needed to receive it; and second, reward prediction errors. In other words, motivation and learning.

Unfortunately, things get messier when we dig deeper: microdialysis studies show that dopamine is correlated with the expected value of a reward only in the NA and ventromedial frontal cortex, not in other parts of the striatum and frontal cortex. This kind of finding challenges the idea that there is a global dopamine message that produces similar effects across the brain.

From such observations, we might well conclude that there are all kinds of different dopamine functions and that this is just one neurochemical that won’t be corralled into a neat and parsimonious theory.

Thankfully, however, there does appear to be a slightly more elegant explanation that chimes with other things we know and ties it all together much more neatly. Here it is:

Each part of the striatum receives inputs from specific regions of the cortex. For example, the dorsolateral striatum gets inputs from motor regions of the cortex; the dorsomedial striatum gets inputs from cognitive parts of the cortex, etc. What’s more, each of these striatal subregions shares a common microcircuit architecture, including separate D1 vs. D2 receptor-bearing neurons, cholinergic interneurons, etc.

It has therefore been proposed, I think quite uncontroversially, that each of these subregions – although not as sharply separated from one another as initially believed – processes a specific type of information that has been sent down from the associated region of the cortex.

Insofar as this ties into the dopamine stuff we’ve learnt about so far, it’s been theorised that dopamine signals essentially provide information about how worthwhile it is to expend specific types of limited resource at any given time.

For example,

In the dorsolateral striatum, which processes motor information, the limited resource in question is the energy required to move. We know that increasing dopamine in this region makes it more likely that an animal will choose to expend effort to move, so this makes sense.

In the dorsomedial striatum, which processes cognitive information, the limited resources are attention and working memory. We know that dopamine, particularly in this region, is important for deciding whether cognitive effort is worthwhile, so this makes sense too.

In the NA, which processes motivational information, the limited resource is time – should I spend my limited time on this or that? We know that dopamine in this region indicates that an effortful, temporally-extended action is worth pursuing, so, again, this makes sense.

All of this also chimes with something else we know about the basal ganglia, a network of nuclei that includes, among other things, the striatum and the SN, and that is tightly interconnected with the VTA and various regions of the cortex (i.e. all the key dopaminergic circuitry).

The basal ganglia contain two main pathways: the direct pathway and the indirect pathway. Though the circuitry isn’t as neat as we might like, it appears that the direct pathway is responsible for selecting a specific action, whereas the indirect pathway is involved in inhibiting all other courses of action.

Here’s a diagram of the basal ganglia’s circuitry (the image on the left was what they initially believed the circuitry looked like; the one on the right is what we now think it looks like):

(Don’t worry if this image makes little sense to you – it’s not overly important for our purposes here. The only thing worth knowing – which isn’t labelled in this diagram – is that the direct pathway is represented by the lines going from the D1 receptor in the striatum box down to the GPi/SNr box, and the indirect pathway is represented by the lines going from the D2 receptor in the Striatum box down to the GPe, STN, and GPi/SNr boxes).

Again, all of this makes intuitive sense:

If a rat decides that it wants to seek out a piece of cheese, then it cannot also lie around and sleep, it cannot also play with its other rat chums, it cannot also drink from a receptacle on the other side of its cage, etc. A definitive course of action has to be taken, which requires the selection of a specific action as well as the inhibition of all other courses of action.

Another interesting thing about this circuitry is that direct pathway neurons in the striatum seem to exclusively contain D1 receptors, whereas indirect pathway neurons in the striatum almost exclusively contain D2 receptors. This is relevant to some stuff I talk about in the appendix – e.g. that in drug and food addicts, D2 receptors are downregulated – but possibly not too interesting for our purposes right here.

So, in essence, when dopamine levels start to rise, two things seem to happen:

Dopamine binds to D1 receptors on direct pathway neurons in the striatum, which results in motivation, attention, working memory, physical energy, etc. getting assigned to a specific course of action.

Dopamine binds to D2 receptors on indirect pathway neurons in the striatum, modulating the pathway that works to inhibit the assignment of motivation, attention, working memory, physical energy, etc. to other possible options.

To add a bit of graphical representation, recall this diagram from earlier that was used to illustrate dopamine’s role in learning:

It seems that the RPE signal allows us to update the values associated with specific types of actions, whereas the motivational signal works to invigorate specific, high-value courses of action (represented by a thick arrow) when specific states are encountered. Moreover, while all of this is going on, this motivational signal is also inhibiting all of the other, lower-value actions that are not being selected.
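If it helps to see the two roles side by side, here’s a deliberately cartoonish Python sketch, assuming a simple delta-rule value update of the kind used in reinforcement learning. It illustrates the logic only and is not a model of real basal ganglia circuitry: the function names (`update_value`, `select_action`), the learning rate and the winner-takes-all selection rule are all my own hypothetical choices.

```python
# Toy illustration only: a cartoon of the two roles described above,
# not a biophysical model. All names and numbers are hypothetical.

def update_value(value: float, reward: float, learning_rate: float = 0.1) -> float:
    """Nudge a learned value towards the reward actually received.

    The reward prediction error (RPE) is what happened minus what was
    expected: positive if better than expected, negative if worse,
    zero if the outcome was fully predicted.
    """
    rpe = reward - value
    return value + learning_rate * rpe


def select_action(values: dict[str, float]) -> dict[str, float]:
    """Invigorate the single highest-value action and suppress the rest.

    Direct-pathway-like 'go': the winning action gets a drive of 1.0.
    Indirect-pathway-like 'no-go': every competing action gets 0.0.
    """
    best = max(values, key=values.get)
    return {action: (1.0 if action == best else 0.0) for action in values}


if __name__ == "__main__":
    values = {"seek cheese": 0.0, "sleep": 0.2, "play": 0.1}
    # Finding cheese on ten trials in a row (reward = 1.0) raises its value.
    for _ in range(10):
        values["seek cheese"] = update_value(values["seek cheese"], reward=1.0)
    print(round(values["seek cheese"], 3))
    print(select_action(values))
```

Running it, the cheese option’s learned value climbs towards 1 (to about 0.651 after ten rewarded trials), and the selection step then drives that option alone while zeroing out sleeping and playing. Real action selection is graded and noisy rather than all-or-nothing.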

So, there you have it.

That’s my best attempt at covering the subject of dopamine.

There’s still lots we don’t know about the dopamine system, but as this review hopefully shows, there’s quite a lot we do now know as well.

I’ve done my best to present the research as I understand it at the moment, but if you feel like I've missed anything or that I've got anything wrong, please do let me know and I’ll do my best to correct any errors.

Appendix

Switching between learning and motivation

As you’ll hopefully know by now, the upshot of this entire post is essentially ‘dopamine is key to both motivation and learning’, but this still raises a further question:

How can dopamine mean both of these things at the same time – especially since we’ve now dismissed the whole ‘tonic=motivation, phasic=learning’ hypothesis?

We don’t have a watertight explanation here, but there is some interesting speculation (2):

It has been suggested that in order to interpret learning and motivational signals appropriately, dopaminergic circuitry may well switch from one mode to another, and that acetylcholine may play a key role in this process:

At the same time as dopamine cells fire in response to unexpected cues, cholinergic interneurons (CINs) in the striatum pause firing. These pauses could be caused by a number of things, including inhibitory input from GABA neurons in the VTA, and it’s been proposed that these pauses may open up something like a learning window for striatal plasticity.

We know that dopamine-dependent plasticity is continually suppressed by muscarinic receptors (which are activated by acetylcholine) in the indirect pathway, so this all makes good sense.

In essence, while these CINs are temporarily paused, dopamine has the power to bring about changes in the striatum, allowing new learning to be imprinted into our neural tissue. When these CINs are active, on the other hand, dopamine-dependent plasticity is blocked, meaning dopamine’s role is limited to motivating behaviour.
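To make the proposed switch concrete, here’s a toy Python sketch of the ‘learning window’ idea. It assumes, purely for illustration, that a single number stands in for a stored striatal value, and that a dopamine signal either updates it (CINs paused) or only drives behaviour (CINs active). The function and parameter names are hypothetical, clipping a dopamine dip to zero drive is my own assumption, and the hypothesis itself is still speculative.

```python
# Toy sketch of a speculative hypothesis, not established physiology.
# Names, the learning rate and the clipping rule are hypothetical.

def striatal_update(value: float, dopamine: float, cins_paused: bool,
                    learning_rate: float = 0.1) -> tuple[float, float]:
    """Return (new_value, motivational_drive) for one dopamine event.

    value:       a stored value standing in for striatal synaptic weights.
    dopamine:    signed dopamine signal relative to baseline.
    cins_paused: True while cholinergic interneurons pause firing, i.e.
                 the hypothesised window in which plasticity is allowed.
    """
    if cins_paused:
        # Learning mode: dopamine is allowed to rewrite the stored value.
        value += learning_rate * dopamine
    # Motivation applies in both modes: a burst above baseline
    # invigorates behaviour; a dip below baseline gives no drive.
    drive = max(0.0, dopamine)
    return value, drive


if __name__ == "__main__":
    # CINs active: dopamine motivates, but the stored value is untouched.
    print(striatal_update(0.5, 0.4, cins_paused=False))  # (0.5, 0.4)
    # CINs paused: the same dopamine burst now also updates the value.
    new_value, drive = striatal_update(0.5, 0.4, cins_paused=True)
    print(round(new_value, 2), drive)  # 0.54 0.4
```

The same dopamine burst produces the same motivational drive in both calls; the only thing the CIN state decides is whether anything gets written down.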

Aversive and novel stimuli: a couple of other complicating facts

If you’re still with me, you’re probably sick of all the additional layers of complexity that have been built into this picture with each passing section.

Unfortunately, here’s another one for you:

There’s a ton of evidence showing that dopamine neurons not only fire in response to rewarding stimuli, but also in response to novel and even aversive stimuli (11).

Delving deeper, it looks like there are different subpopulations of dopamine neurons in the VTA and SN that encode different things. For example:

Multiple studies have shown that noxious stimuli presented to anaesthetized animals excite some dopamine neurons but inhibit others (11).

Different groups of dopamine neurons have been shown to be phasically excited or inhibited by aversive events, including noxious stimulation of the skin, sensory cues predicting aversive shocks, and sensory cues predicting aversive airpuffs (11).

This may seem to throw everything that we’ve just said into confusion, but some smart researchers have found a way to make sense of this madness (11). They suggest that there are two broad types of dopamine neurons:

Motivational value encoding neurons – these neurons encode the value of an event or stimulus using accurate reward prediction errors. Such neurons are strongly inhibited if a reward is omitted and mildly excited if an aversive event is avoided. These are likely the kinds of neurons Schultz et al were recording in the experiment discussed above.

Motivational salience encoding neurons – these neurons are excited by both rewarding and aversive events. It seems that rather than encode value, they instead encode the motivational salience, i.e. relevance/importance, of an event. These neurons respond when salient events are present, but not when they’re absent.

Motivational value neurons are involved in motivating us to seek out and explore rewarding events – and also in learning to reproduce behaviours that led to rewards in the past.

Motivational salience neurons, on the other hand, allow us to detect, predict, and respond to situations of high importance. They may be involved in orienting our attention and also mustering cognitive control for things like working memory, action selection, etc.
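Here’s a toy Python caricature of the difference between the two types. The response magnitudes are made-up illustrative numbers, not firing rates from the paper; the value neuron’s signs follow the description above (plus inhibition by aversive events, which is how value-coding neurons are characterised in (11)), and the salience neuron simply fires for any important event that is actually present.

```python
# Toy caricature only: magnitudes are arbitrary, not measured data.

def value_neuron(event: str) -> float:
    """Signed response: tracks how good or bad an outcome was."""
    responses = {
        "reward": 1.0,             # good outcome: excited
        "aversive": -1.0,          # bad outcome: inhibited
        "reward_omitted": -1.0,    # expected reward missing: strongly inhibited
        "aversive_avoided": 0.3,   # expected punishment missing: mildly excited
    }
    return responses.get(event, 0.0)


def salience_neuron(event: str) -> float:
    """Unsigned response: excited by any important event that is present."""
    return 1.0 if event in {"reward", "aversive"} else 0.0


if __name__ == "__main__":
    for event in ("reward", "aversive", "reward_omitted", "aversive_avoided"):
        print(f"{event:17} value={value_neuron(event):+.1f} "
              f"salience={salience_neuron(event):+.1f}")
```

The printout makes the key point: the two neuron types agree about rewards but disagree completely about aversive events, where one goes negative and the other goes positive.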

Based on the specific regions of the brain to which these different populations of neurons connect – which I won’t go into here – this account seems quite plausible.

As one tiny and final – I promise – additional layer of complexity, it turns out that the majority of dopamine neurons, including both value- and salience-encoding neurons, show burst responses to several kinds of sensory events that can be thought of as ‘alerting signals’ (11).

These dopamine alerting signals can be triggered by surprising sensory events like an unexpected auditory click or a light flash, and they’ve been shown to produce burst firing in 60-90% of dopamine neurons across the SN and VTA. The size of the alerting signal seems to reflect the extent to which the stimulus is surprising (11).

Bringing this all together, then:

Dopamine neurons often respond to a stimulus with two signals: a fast, alerting signal that encodes whether it is potentially important, and then a second, slower signal that encodes the stimulus’s motivational meaning – either value or salience, depending on the neuron (11).
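As a rough sketch of what that two-phase response looks like over time, here’s a toy Python function. The 100 ms split between the alerting phase and the later phase, and the idea that the alert simply scales with surprise, are illustrative assumptions of mine; real response timings vary and the two phases overlap.

```python
# Toy sketch: timings and magnitudes are illustrative assumptions.

def two_phase_response(surprise: float, meaning: float,
                       alert_ms: int = 100, total_ms: int = 300) -> list[float]:
    """Return a toy response trace in 1 ms bins.

    surprise: 0 to 1, how unexpected the stimulus is; sets the early alert.
    meaning:  the later signal, i.e. signed value for a value-coding
              neuron or unsigned salience for a salience-coding neuron.
    """
    return [surprise if t < alert_ms else meaning for t in range(total_ms)]


if __name__ == "__main__":
    # An unexpected click: big alert, but it turns out to mean nothing.
    click = two_phase_response(surprise=0.8, meaning=0.0)
    # An unexpected reward cue, as seen by a value-coding neuron.
    cue = two_phase_response(surprise=0.8, meaning=1.0)
    print(click[50], click[200])  # 0.8 0.0
    print(cue[50], cue[200])      # 0.8 1.0
```

Early on, the two traces are indistinguishable; only the later phase tells you what the stimulus actually meant.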

These findings add detail and texture to the picture presented above, but I don’t think they conflict with it in a way that forces us to update our broad-strokes understanding of what dopamine is or does.

If dopamine doesn't cause pleasure, why are dopamine-spiking drugs like cocaine and amphetamine so pleasant?

While going through this literature, one thing that bothered me was that drugs that spike dopamine through one mechanism or another – things like cocaine and amphetamine – do actually seem to produce pleasure, despite all of this evidence suggesting that dopamine’s relationship with pleasure is not causative.

What’s up with that?

I’ve come across some sensible discussion on what might be going on here – seems like it could be one of three things (or maybe a combination of them all?):

First, it might just be that a drug like cocaine makes everything in our environment appear more appealing.

I’ve seen speculation that certain forms of anhedonia – including those associated with schizophrenia and depression – aren’t actually reductions in pleasure, i.e. liking, but are instead deficits in motivation, i.e. wanting. For people with this kind of anhedonia, nothing seems appealing and nothing spikes their dopamine levels. As a result, they can’t bring themselves to do anything.

Being under the influence of cocaine may just be the complete opposite of this. Everything within our environment glitters with promise and appeal. Conversations are more interesting, drinks are more drinkable, cigarettes are more smokable. Everything is exciting, and we want to explore it all.

This experience, while not exactly ‘liking’, is sufficiently positively valenced to be interpreted by the cocaine user as pleasurable.

Another possibility is that this heightened wanting – and the expanded incentive salience that comes with it – is actually being misinterpreted as pleasure, when in reality it is just empty wanting that has sufficiently disoriented us to distort our interpretation of how we are feeling.

This kind of strikes me as plausible as well.

And the final explanation is simply that dopamine-spiking drugs cause both liking and wanting. This is supported by the fact that drugs like cocaine and amphetamines result in the elevation of endogenous opioid and GABA signals in the NA – neurotransmitters associated with liking rather than wanting – which could very well be producing the sensation of pleasure (6).

Some other interesting facts about dopamine that I've come across in my travels

To tie this off, I'm just going to dump down a load of interesting facts that I’ve come across while doing my research for this piece. Here they are:

Sleep deprivation seems to downregulate D2 receptors. Given that D2 downregulation is associated with hypofrontality in the form of loss of executive function, impulsivity, etc., it's suggested that this may be a factor worth addressing with addiction treatments. For example, if you can sort out an addict's sleep, bump up those D2 receptor numbers, maybe you can bring the prefrontal cortex back into play (13).

VTA dopamine neurons are innervated by parts of the brain associated with maintaining homeostasis, e.g. the hypothalamus. In essence, this means the firing of these VTA neurons can be inhibited or excited based on whether we are hungry, thirsty, etc. This makes sense: if we are hungry, the ability of a Big Mac to motivate us to action should go up – and it does (3).

Using positron emission tomography, researchers found that people who are disposed to experience intrinsically motivated flow states during their daily lives have greater dopamine D2 receptor availability in striatal regions, particularly the putamen (4).

Gyukovics et al found that carriers of a genetic polymorphism that affects striatal D2 receptor availability were more prone to experience flow during study and work-related activities (4). Combined with the study above and what I talked about earlier with regard to the basal ganglia and the direct/indirect pathways, I can’t help but speculate that this is because people with increased D2 expression may be more capable of inhibiting other behaviours and thoughts, allowing for the heightened focus and singularity of purpose that we associate with flow states.

Addictive drugs cause downregulation of D2 receptors and opioid receptors in the ventral striatum. This may account for both a reduction in motivation and a reduced capacity to feel pleasure (9).

Exercise has been shown to be effective for preventing and treating substance abuse. A rat study showed that exercise seems to reduce the expression of D1 receptors and increase the expression of D2 receptors in certain parts of the striatum (12).

Monkeys expressed a strong preference to view informative visual cues that would allow them to predict the size of a future reward (rather than uninformative cues that provided no reward info). Likewise, dopamine neurons were shown to be excited by the opportunity to view the informative cues in a manner that was correlated with the animal's behavioural preference. This suggests dopamine neurons not only motivate actions to gain rewards but also motivate actions to gain information that supports making accurate predictions about those rewards (18).

In macaque monkeys, the same midbrain dopamine neurons that signal the amount of water expected also signal the expectation of information. The data suggest that single dopamine neurons process both primitive and cognitive rewards (18). Combined with the study above, this might shine a bit of light on why some of us spend so much time reading and learning: it looks like our brains respond to certain types of information in the same way that they respond to more typical rewarding stimuli.

It's known that the anterior cingulate cortex is implicated in encoding whether an action is worth performing in view of the expected benefit and cost of performing the action. Schweimer et al found that D1 blockade in this part of the brain led rats to prefer a low-reward, low-effort option over a high-reward, high-effort option. By contrast, rats given a D2 blocker in this region performed the same as normal rats. It seems D1 receptors in this region are key for motivated behaviour (14).

Lever-pressing schedules that have minimal work requirements are largely unaffected by dopamine depletion in the NA, BUT reinforcement schedules that require lots of work are substantially impaired by NA dopamine depletion. Moreover, rats with NA dopamine depletion reallocate their behaviour away from food-reinforced tasks that require lots of energy and towards less effortful types of food-seeking behaviour. Is this almost the rat equivalent of procrastination (15)?

In non-human primates, D2 receptor numbers were measured with PET scans, first while the animals were housed apart and again once they were housed together. Animals that became dominant showed an increase in D2 receptors in the striatum, whereas subordinate animals did not. Dominant animals with higher D2 levels self-administered much less cocaine (16).

Researchers used gene transfer to increase D2 receptor expression in rats that had previously been trained to self-administer alcohol. Alcohol self-administration subsequently fell sharply, until D2 receptor levels returned to baseline, at which point alcohol intake returned to its previous level (17).
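The Salamone finding above, where NA dopamine depletion pushes rats away from high-effort food-seeking towards easier options, can be caricatured as a simple cost-benefit choice. In this toy Python sketch I’ve modelled depletion as a larger penalty on effort; that mapping, the option names and all the numbers are hypothetical assumptions for illustration, not parameters from the studies.

```python
# Toy cost-benefit choice. Treating dopamine depletion as a bigger
# effort penalty is a hypothetical illustration, not a study finding.

def choose(options: dict[str, tuple[float, float]], effort_penalty: float) -> str:
    """Pick the option with the highest reward minus penalised effort.

    options:        name -> (reward, effort)
    effort_penalty: how costly each unit of effort feels; a bigger
                    penalty stands in (hypothetically) for depletion.
    """
    def net_value(name: str) -> float:
        reward, effort = options[name]
        return reward - effort_penalty * effort

    return max(options, key=net_value)


if __name__ == "__main__":
    options = {
        "lever pressing for preferred food": (4.0, 3.0),  # high reward, high effort
        "freely available chow": (1.0, 0.2),              # low reward, low effort
    }
    print(choose(options, effort_penalty=0.5))  # lever pressing for preferred food
    print(choose(options, effort_penalty=2.0))  # freely available chow
```

Nothing about the rewards changes between the two calls; only the felt cost of effort does, and that alone is enough to flip the choice.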

References

Quick note about my approach to referencing: this isn't an academic paper, but I am aspiring to something vaguely approaching rigour. As a result, I wanted to include references to support everything I’ve said, but I'm finding the task of doing so quite painful. I'm therefore just going to cite the paper – or the textbook chapter – where I came across a specific fact, so that if you wish to follow up on or confirm anything I've written, you can do so without too much trouble. I realise this is a bit lazy of me, but honestly, this piece is never going to get written if I have to cite every single study precisely, so this is a lesser-of-two-evils approach. Also, apologies for the weird order of references in the text – don’t ask.

1. Pinel and Barnes (2018) – Biopsychology – Chapter 15: Drug use, drug addiction, and the brain’s reward circuits

2. Barker (2018) – What does dopamine mean?

3. Volkow, Wise, and Baler (2017) – The dopamine motive system: implications for drug and food addiction

4. Di Domenico and Ryan (2017) – The Emerging Neuroscience of Intrinsic Motivation: A New Frontier in Self-Determination Research

5. Berridge and Kringelbach (2008) – Affective neuroscience of pleasure: rewards in humans and animals

6. Berridge and Kringelbach (2015) – Pleasure systems in the brain

7. Volkow, Fowler, and Wang (2003) – The addicted human brain: insights from imaging studies

8. Kandel et al (2021) – Principles of Neural Science – Chapter 43: Motivation, reward, and addictive states

9. Berridge and Dayan (2021) – Liking

10. Berridge et al (2003) – Hyperdopaminergic Mutant Mice Have Higher “Wanting” But Not “Liking” for Sweet Rewards

11. Bromberg-Martin, Matsumoto, and Hikosaka (2010) – Dopamine in motivational control

12. Baik (2013) – Exercise Reduces Dopamine D1R and Increases D2R in Rats: Implications for Addiction

13. Volkow et al (2012) – Evidence That Sleep Deprivation Downregulates Dopamine D2R in Ventral Striatum in the Human Brain

14. Schweimer et al (2006) – Dopamine D1 receptors in the anterior cingulate cortex regulate effort-based decision making

15. Salamone et al (2008) – Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits

16. Schultz and Baez-Mendoza (2013) – The role of the striatum in social behaviour

17. Thanos et al (2001) – Overexpression of dopamine D2 receptors reduces alcohol self-administration

18. Bromberg-Martin and Hikosaka (2009) – Midbrain Dopamine Neurons Signal Preference for Advance Information about Upcoming Rewards
